¶ 1. Interoperability

Kavouras and Kokla (2007) state that

“interoperability is the ability of systems or products to operate effectively and efficiently in conjunction, on the exchange and reuse of available resources, services, procedures, and information, in order to fulfil the requirements of a specific task. […] It is not exhausted with integration, but also involves means of intelligent communication such as querying, extraction, transformation etc.”

The European Interoperability Framework (EU, 2017), intended to provide recommendations and guidance to support a shared and interoperable digital environment for communication and exchange of data with public administrations in Europe, identifies four interoperability layers:

- technical;

- semantic;

- organizational; and

- legal interoperability.

For the European Interoperability Framework, ‘Interoperability’ is “the ability of organisations to interact towards mutually beneficial goals, involving the sharing of information and knowledge between these organisations, through the business processes they support, by means of the exchange of data between their ICT systems” (EU, 2017).

Simplifying the concept, interoperability can be considered a characteristic of single datasets that determines their reusability across systems (e.g., for technical interoperability, their potential to be consistently imported and exported by software) (Noardo et al., 2021a,b).
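To make the import/export view of technical interoperability concrete, the sketch below round-trips a toy dataset through two text encodings and checks whether it comes back unchanged. The dataset, its attribute names and the use of JSON and CSV as stand-in "formats" are assumptions for illustration only; real geospatial exchange involves formats such as IFC or CityGML and much richer checks, as in the GeoBIM benchmark studies cited above.

```python
import csv
import io
import json

# Toy dataset (assumed for illustration): two features with typed attributes.
features = [
    {"id": "b1", "class": "building", "height": 12.5},
    {"id": "b2", "class": "building", "height": 7.0},
]


def json_round_trip(records):
    """Export the records to JSON text, then import them back."""
    return json.loads(json.dumps(records))


def csv_round_trip(records):
    """Export the records to CSV text, then import them back.

    CSV carries no type information, so every value is re-imported as a string.
    """
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(records[0]))
    writer.writeheader()
    writer.writerows(records)
    buffer.seek(0)
    return [dict(row) for row in csv.DictReader(buffer)]


def is_consistent(records, round_trip):
    """True if the re-imported records are identical to the original ones."""
    return round_trip(records) == records


print(is_consistent(features, json_round_trip))  # True
print(is_consistent(features, csv_round_trip))   # False: heights return as strings
```

The JSON round trip preserves the numeric type of `height`, while the CSV one does not; in this narrow sense, JSON is the more interoperable carrier for this toy dataset.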

Semantic interoperability, although mainly a technical issue, is closely related to, and influences, the human side of interoperability as well, which concerns how data are interpreted and described for reuse.

Several frameworks provide guidance on how to manage data to support an extended concept of interoperability that also includes accessibility, findability and reuse. Some relevant examples are: the European Interoperability Framework principles and recommendations (Table 1); the Data Management Principles provided by the Group on Earth Observations (GEO) for the Global Earth Observation System of Systems (GEOSS), aimed at good management of Earth Observation data (Table 2); and the Findability, Accessibility, Interoperability and Reusability (FAIR) principles, intended to support good sharing of data in science (Wilkinson et al., 2016) (Table 3).

¶ 2. Integration

Integration is the combination or conflation of information from different datasets (Worboys & Duckham, 2004).
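As a minimal illustration of combining information from different datasets, the sketch below joins two hypothetical record lists on a shared identifier. The datasets, the `id` key and the rule that the first source wins on conflicting attributes are all assumptions; actual spatial conflation usually also requires matching features geometrically, which is not shown here.

```python
def integrate(primary, secondary, key="id"):
    """Combine two lists of records that share an identifier attribute.

    Records present in only one source are kept. When both sources define
    the same attribute for the same record, the value from `primary` wins
    (an assumed conflict-resolution rule).
    """
    merged = {record[key]: dict(record) for record in secondary}
    for record in primary:
        merged.setdefault(record[key], {}).update(record)
    return sorted(merged.values(), key=lambda record: record[key])


# Hypothetical sources: a cadastre with ownership, a survey with heights.
cadastre = [{"id": "b1", "owner": "municipality"}, {"id": "b2", "owner": "private"}]
survey = [{"id": "b1", "height": 12.5, "owner": "unknown"}, {"id": "b3", "height": 4.0}]

for record in integrate(cadastre, survey):
    print(record)
# {'id': 'b1', 'height': 12.5, 'owner': 'municipality'}
# {'id': 'b2', 'owner': 'private'}
# {'id': 'b3', 'height': 4.0}
```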

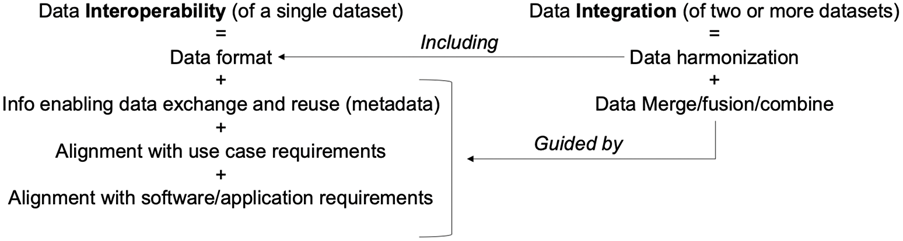

Figure 1 depicts what the two concepts of ‘interoperability’ and ‘integration’ entail and how they are related to each other.

Figure 1. Data interoperability vs. data integration. (Noardo, 2022)

¶ 3. The proposed workflow and framework for multisource data integration

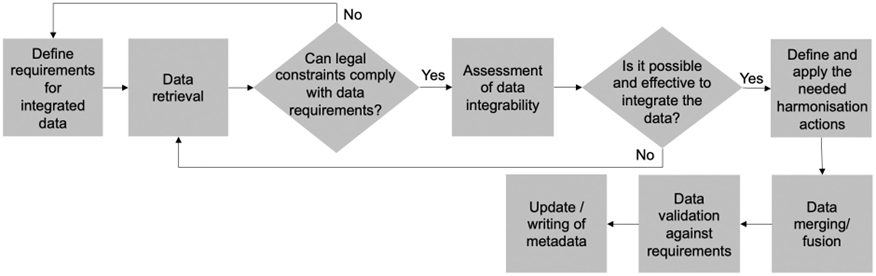

Noardo (2022) summarizes a workflow for effective data integration, shown in Figure 2. It starts with the definition of requirements, goes through data retrieval and any processing needed to harmonise the different sources, then data merging and data validation, and ends with the update of metadata after data merging.

Figure 2. A workflow for suitable data integration.
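The order of the phases can be sketched as a simple pipeline in which each phase reads and extends a shared state. The phase names follow the workflow described above, while the handler functions, the toy data and the decision to stop before the metadata update when validation fails are assumptions for illustration, not the implementation from Noardo (2022).

```python
# Phases in the order described for the workflow.
PHASES = [
    "define_requirements",
    "retrieve_data",
    "harmonise",
    "merge",
    "validate",
    "update_metadata",
]


def run_workflow(handlers, state=None):
    """Run each phase handler in order on a shared state dictionary.

    Stops right after validation if the merged data are not valid
    (an assumed policy: metadata are only updated for validated data).
    """
    state = dict(state or {})
    state["completed"] = []
    for phase in PHASES:
        state = handlers[phase](state)
        state["completed"].append(phase)
        if phase == "validate" and not state.get("valid", False):
            break
    return state


# Hypothetical handlers working on toy building records.
handlers = {
    "define_requirements": lambda s: {**s, "required": {"id", "height"}},
    "retrieve_data": lambda s: {**s, "sources": [[{"id": "b1", "height": 12.5}],
                                                 [{"id": "b2", "height_cm": 700}]]},
    # Harmonise: express every height in metres under the same attribute name.
    "harmonise": lambda s: {**s, "sources": [
        [{"id": r["id"], "height": r.get("height", r.get("height_cm", 0) / 100)}
         for r in source]
        for source in s["sources"]
    ]},
    "merge": lambda s: {**s, "data": [r for source in s["sources"] for r in source]},
    "validate": lambda s: {**s, "valid": all(s["required"] <= set(r) for r in s["data"])},
    "update_metadata": lambda s: {**s, "metadata": {"lineage": list(s["completed"])}},
}

result = run_workflow(handlers)
print(result["data"])  # [{'id': 'b1', 'height': 12.5}, {'id': 'b2', 'height': 7.0}]
```

Keeping each phase behind a named handler makes it easy to swap in real retrieval, harmonisation or validation logic without changing the order of the workflow.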

Effective data integration can only be planned and achieved when a specific use case is considered. Therefore, the essential starting point is the definition of the requirements for the data to be obtained from the integration.

After a positive assessment of data integrability, harmonisation actions must be chosen and applied for each considered aspect, including:

- Conversion - if the data are very similar in content, but a different format is needed;

- Generalization - if the destination dataset is less detailed than the origin one;

- Enrichment - if the destination dataset is more detailed, and of higher quality and richer in content, than the origin one.
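The three rules above can be read as a small decision procedure. The sketch below assumes that each dataset is summarised by just a format name and a single numeric level of detail (higher means more detailed); both descriptors are hypothetical simplifications of the many parameters an actual integrability assessment considers.

```python
def choose_actions(origin, destination):
    """Suggest harmonisation actions for moving `origin` data into `destination`.

    Each dataset is described by a hypothetical dict with a "format" name and a
    numeric "lod" (level of detail, higher = more detailed).
    """
    actions = []
    if origin["format"] != destination["format"]:
        actions.append("conversion")
    if destination["lod"] < origin["lod"]:
        actions.append("generalization")
    elif destination["lod"] > origin["lod"]:
        actions.append("enrichment")
    return actions


print(choose_actions({"format": "CityGML", "lod": 2}, {"format": "CityJSON", "lod": 2}))
# ['conversion']
print(choose_actions({"format": "CityGML", "lod": 3}, {"format": "CityJSON", "lod": 1}))
# ['conversion', 'generalization']
print(choose_actions({"format": "CityJSON", "lod": 1}, {"format": "CityJSON", "lod": 2}))
# ['enrichment']
```

The sketch lets conversion combine with generalization or enrichment, since a change of format and a change of detail may both be needed; the list above does not say whether the actions combine, so treat this as an assumption.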

Once suitable harmonisation processing has been applied to the origin dataset to make it fully consistent with the destination data requirements, data fusion operations produce the integrated dataset. The final steps are data validation and the update of metadata, which keeps track of the applied processing through proper provenance tracking.
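Provenance tracking can be approximated by recording, in the metadata, every operation applied to the data together with its parameters. In the sketch below, `apply_with_provenance`, the `lineage` metadata key and the `rescale_heights` step are hypothetical; a real workflow could instead encode lineage according to a metadata standard such as ISO 19115 or the W3C PROV model.

```python
import copy


def apply_with_provenance(data, metadata, operation, **params):
    """Apply `operation(data, **params)` and log the step in the metadata.

    Returns (new_data, new_metadata); the input metadata are not modified.
    """
    new_data = operation(data, **params)
    new_metadata = copy.deepcopy(metadata)
    new_metadata.setdefault("lineage", []).append(
        {"operation": operation.__name__, "parameters": dict(params)}
    )
    return new_data, new_metadata


def rescale_heights(records, divisor):
    """Hypothetical harmonisation step: divide every height by `divisor`."""
    return [{**record, "height": record["height"] / divisor} for record in records]


data = [{"id": "b2", "height": 700}]
metadata = {"title": "Survey buildings"}
data, metadata = apply_with_provenance(data, metadata, rescale_heights, divisor=100)
print(data)                 # [{'id': 'b2', 'height': 7.0}]
print(metadata["lineage"])  # [{'operation': 'rescale_heights', 'parameters': {'divisor': 100}}]
```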

¶ 4. References

- EU (2017). New European Interoperability Framework – Promoting seamless services and data flows for European public administrations. ISBN 978-92-79-63756-8. https://doi.org/10.2799/78681

- Kavouras, M., & Kokla, M. (2007). Theories of geographic concepts: ontological approaches to semantic integration. CRC Press.

- Noardo, F. (2022). Multisource spatial data integration for use cases applications. Transactions in GIS, 26(7), 2874-2913. https://doi.org/10.1111/tgis.12987

- Noardo, F., Krijnen, T., Arroyo Ohori, K., Biljecki, F., Ellul, C., Harrie, L., ... & Stoter, J. (2021a). Reference study of IFC software support: The GeoBIM benchmark 2019—Part I. Transactions in GIS, 25(2), 805-841.

- Noardo, F., Arroyo Ohori, K., Biljecki, F., Ellul, C., Harrie, L., Krijnen, T., ... & Stoter, J. (2021b). Reference study of CityGML software support: The GeoBIM benchmark 2019—Part II. Transactions in GIS, 25(2), 842-868.

- Wilkinson, M. D., Dumontier, M., Aalbersberg, I. J., Appleton, G., Axton, M., Baak, A., ... & Mons, B. (2016). The FAIR Guiding Principles for scientific data management and stewardship. Scientific Data, 3(1), 1-9.

- Worboys, M. F., & Duckham, M. (2004). GIS: a computing perspective. CRC Press.

¶ Data requirements

Defining data requirements is the process of identifying, prioritizing, precisely formulating and validating the data necessary to achieve specific business objectives.

The definition of data requirements is essential to enable any interoperable process: it supports transparency and allows proper and effective data retrieval, reuse and processing, including multisource data integration.
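Requirements are most useful for validation when they are machine-readable. The sketch below expresses a few data requirements as a declarative mapping from attribute names to an expected type and an optional constraint, then reports how a record deviates from them. The attribute names, the CRS value and the constraints are hypothetical, and real requirement specifications (e.g., ISO 19131 data product specifications or buildingSMART IDS files) are far richer.

```python
# Hypothetical requirements: attribute -> (expected type, optional constraint).
REQUIREMENTS = {
    "id": (str, None),
    "height": (float, lambda value: value >= 0),
    "crs": (str, lambda value: value == "EPSG:4326"),
}


def check(record, requirements):
    """Return the list of requirement violations for one record (empty if compliant)."""
    problems = []
    for name, (expected_type, constraint) in requirements.items():
        if name not in record:
            problems.append(f"missing attribute '{name}'")
        elif not isinstance(record[name], expected_type):
            problems.append(f"'{name}' should be of type {expected_type.__name__}")
        elif constraint is not None and not constraint(record[name]):
            problems.append(f"'{name}' violates its constraint")
    return problems


print(check({"id": "b1", "height": 12.5, "crs": "EPSG:4326"}, REQUIREMENTS))  # []
print(check({"id": "b2", "height": -3.0}, REQUIREMENTS))
# ["'height' violates its constraint", "missing attribute 'crs'"]
```

Returning a list of readable violations, rather than a single true/false flag, keeps the check useful for reporting back to data providers.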

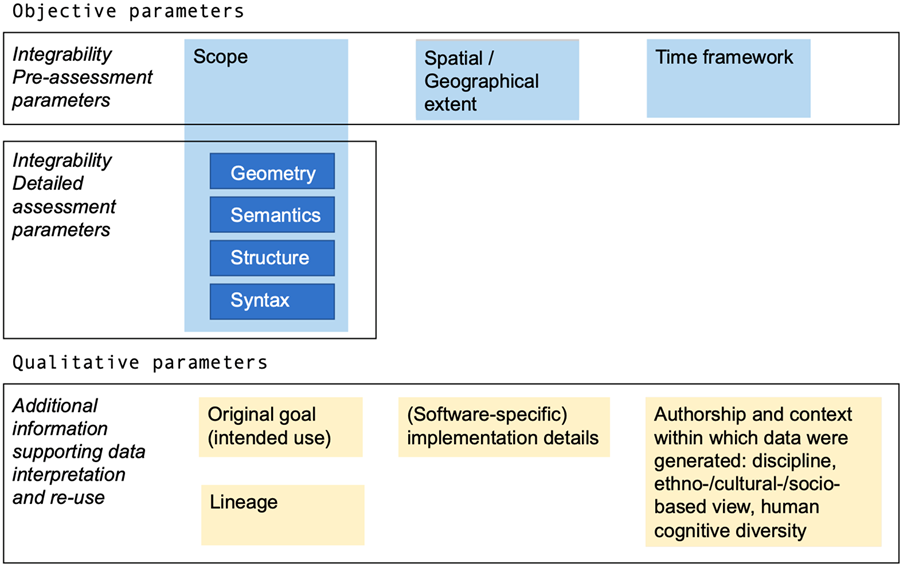

Several parameters, related to the different aspects of data, have to be considered. Such data characteristics should always be explicitly prescribed by data requirements when data are acquired, modelled or harvested, as well as described in metadata. Noardo (2022) summarises parameters defined in the literature (Doerr, 2004; Kavouras & Kokla, 2007; Worboys & Duckham, 2004), which are also increasingly recognised as important for enabling interoperability ecosystems, such as data spaces and digital twins (DSSC, 2024).

Figure 3 summarizes the parameters to be considered when datasets are to be integrated with each other. In particular, the objective parameters in the figure, further specified in Figure 4, are the data features to be considered as data requirements.

Figure 3. Parameters to be considered to assess and prepare effective data integration (Noardo, 2022)

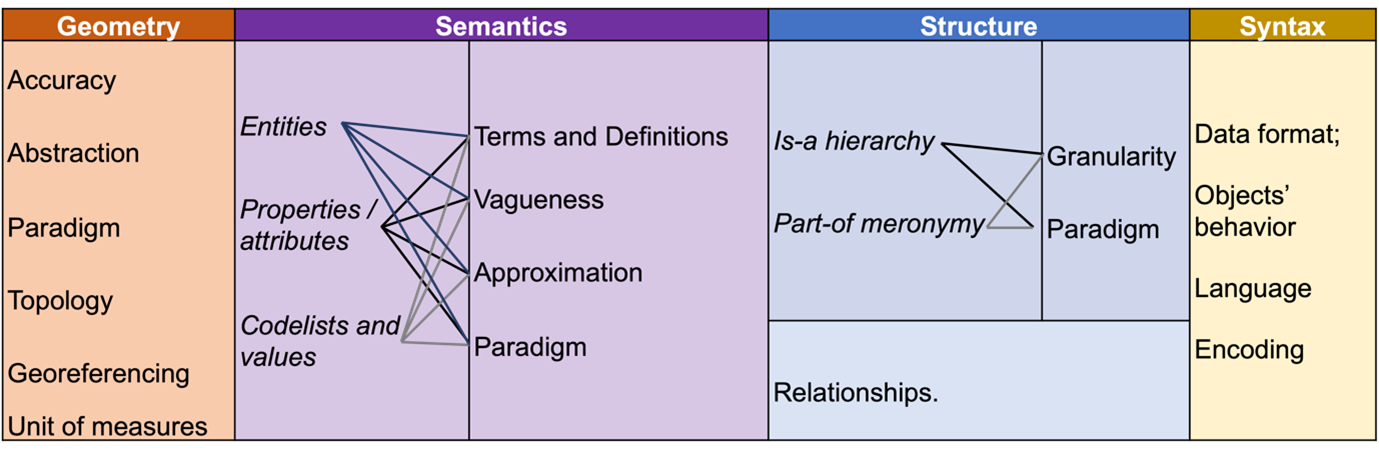

Figure 4. Synthesis of parameters for data integration potential assessment

Besides the geometrical parameters, which are essential although often underestimated, the literature on spatial data management and integration distinguishes between the “semantic” level (i.e., differences in conceptualization and definition, including the terms used, their specific meaning and classifications); the “structural” or “schematic” level (i.e., the conceptual model or schema structuring the data, including relations between entities and attributes, relationships and hierarchies); and the “syntactical” level (i.e., the format of the data) (Doerr, 2004; Kavouras & Kokla, 2007; Mohammadi et al., 2006; Noardo, 2022; Worboys & Duckham, 2004).
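A first, purely descriptive comparison of two datasets along these levels could look like the sketch below. Each dataset is summarised by a hypothetical description holding its format, attribute names and class terms. Comparing term sets is only a crude proxy for semantic heterogeneity, since identical terms may carry different meanings and different terms may be synonyms; the geometrical parameters are left out.

```python
def heterogeneities(a, b):
    """Report differences between two dataset descriptions, grouped by level."""
    found = {}
    if a["format"] != b["format"]:  # syntactical level: data format
        found["syntactical"] = (a["format"], b["format"])
    attributes = set(a["attributes"]) ^ set(b["attributes"])
    if attributes:  # structural/schematic level: attributes not shared by the schemas
        found["schematic"] = sorted(attributes)
    classes = set(a["classes"]) ^ set(b["classes"])
    if classes:  # semantic level (approximated): classification terms not shared
        found["semantic"] = sorted(classes)
    return found


# Hypothetical descriptions of two datasets covering the same area.
cadastre = {"format": "GML", "attributes": ["id", "owner"], "classes": ["building", "parcel"]}
topography = {"format": "GeoJSON", "attributes": ["id", "height"],
              "classes": ["building", "construction"]}

print(heterogeneities(cadastre, topography))
# {'syntactical': ('GML', 'GeoJSON'), 'schematic': ['height', 'owner'], 'semantic': ['construction', 'parcel']}
```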

¶ 5. Standards and tools to define data requirements

Some standards propose guidelines for defining data requirements. In the building and civil engineering works domain, ISO 19650-1:2018 defines the concept of Level of Information Need for information stored in BIM. buildingSMART has defined the Information Delivery Specification (IDS) standard to express exchange requirements in a computer-interpretable format, so that Industry Foundation Classes (IFC)-based data requirements can be defined and data can be validated against them.

In the geospatial domain, data requirements are equally important and depend on the use case for which the data are intended (Malinowski & Zimányi, 2006). ISO 19131 “Data product specifications” provides a further reference, specifically for geographic data products.

Some tools are available or under development to support the definition of data requirements in different fields, and to help users refer to standards consistently and comprehensively when defining such requirements.

G-reqs (Geospatial in-situ requirements) is a tool developed within the European project InCase to define data requirements for in-situ Earth Observation data.

The OGC Data Exchange Toolkit is being developed to facilitate the definition of data requirements for 3D city models and, more generally, for data structured according to standard data models and semantics. It leverages semantic technologies and enables automatic referencing of standards and their components through unique identifiers, as well as validation of data against the defined profile (Noardo et al., 2023).

¶ 6. References

- Doerr, M. (2004). Semantic interoperability: Theoretical considerations. Technical Report 345, ICS-FORTH.

- DSSC (2024). Data Spaces Blueprint, version 1.0. https://dssc.eu/space/BVE/357073006/Data+Spaces+Blueprint+v1.0

- Kavouras, M., & Kokla, M. (2007). Theories of geographic concepts: ontological approaches to semantic integration. CRC Press.

- Malinowski, E., & Zimányi, E. (2006). Requirements specification and conceptual modeling for spatial data warehouses. In R. Meersman, Z. Tari, & P. Herrero (Eds.), On the move to meaningful Internet systems (pp. 1616–1625). Springer. https://doi.org/10.1007/11915072_68

- Mohammadi, H., Binns, A., Rajabifard, A., & Williamson, I. P. (2006). Spatial data integration. Seventeenth UN Regional Cartographic Conference for Asia and the Pacific, Bangkok, Thailand (pp. 1–12). http://hdl.handle.net/11343/26704

- Noardo, F. (2022). Multisource spatial data integration for use cases applications. Transactions in GIS, 26(7), 2874-2913. https://doi.org/10.1111/tgis.12987

- Noardo, F., Atkinson, R., Simonis, I., Villar, A., & Zaborowski, P. (2023). OGC Data Exchange Toolkit: Interoperable and Reusable 3D Data at the End of the OGC Rainbow. In International 3D GeoInfo Conference (pp. 761-779). Cham: Springer Nature Switzerland.

- Worboys, M. F., & Duckham, M. (2004). GIS: a computing perspective. CRC Press.